学习用的hadoop容器(中)

承接上文,这篇文章要把container里面的hadoop配置好,让它可以正常运行。首先是进入到容器里面,查看它的hostname:

sh-4.1# hostname

5660bba0258f

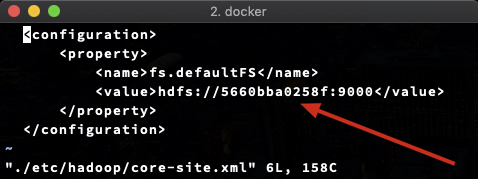

然后在core-site.xml里面更新hostname的配置:

sh-4.1# pwd

/usr/local/hadoop

sh-4.1# find . | grep core-site

./share/hadoop/common/templates/core-site.xml

./etc/hadoop/core-site.xml

./etc/hadoop/core-site.xml.template

./input/core-site.xml

这样hdfs的侦听地址可以保证是正确的。然后重新启动sshd服务:

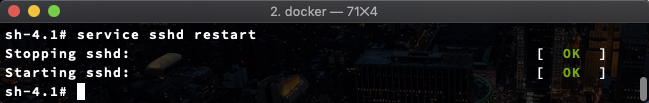

sh-4.1# service sshd restart

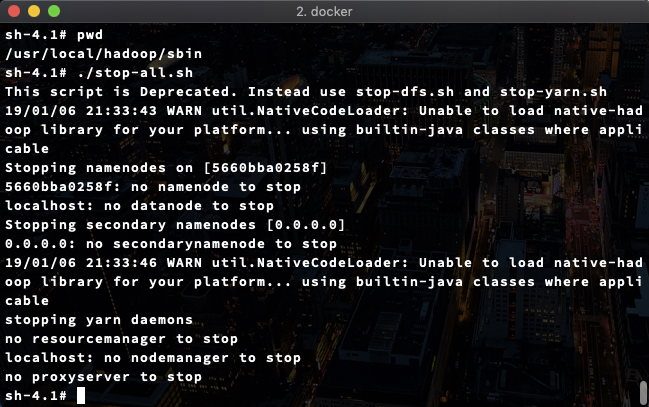

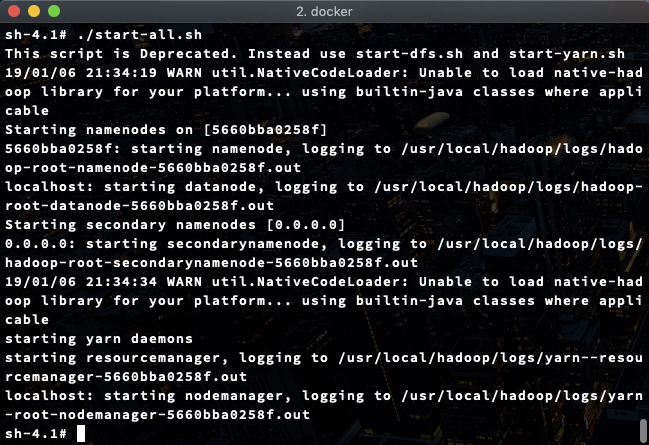

接下来是重启hadoop的服务:

sh-4.1# pwd

/usr/local/hadoop/sbin

这样,hadoop就已经正常运行了。

sh-4.1# cd /root/hadoop-book

接下来就是可以试试看执行hadoop任务,来跑一跑hadoop-book里面带的例子。首先是拷贝数据:

sh-4.1# $HADOOP_PREFIX/bin/hadoop fs -copyFromLocal /root/hadoop-book/input /input2

sh-4.1# $HADOOP_PREFIX/bin/hadoop fs -ls /input/ncdc/sample.txt

19/01/06 21:56:07 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

-rw-r--r-- 1 root supergroup 529 2019-01-06 21:55 /input/ncdc/sample.txt

$HADOOP_PREFIX/bin/hadoop jar /root/hadoop-book/ch02-mr-intro/target/ch02-mr-intro-4.0.jar MaxTemperature /input/ncdc/sample.txt /output

执行过程:

执行结果如下:

sh-4.1# $HADOOP_PREFIX/bin/hadoop fs -ls /output

。。。

-rw-r--r-- 1 root supergroup 0 2019-01-06 21:59 /output/_SUCCESS

-rw-r--r-- 1 root supergroup 17 2019-01-06 21:59 /output/part-r-00000

sh-4.1# $HADOOP_PREFIX/bin/hadoop fs -cat /output/part-r-00000

。。。

1949 111

1950 22

以上就是这个容器的配置和使用过程,在本文的下篇,我会把这个容器制作成Dockerfile,放在github上面供大家使用。

- 上一篇 学习用的hadoop容器(上)

- 下一篇 学习用的hadoop容器(下)