Using an eGPU and TensorFlow on macOS

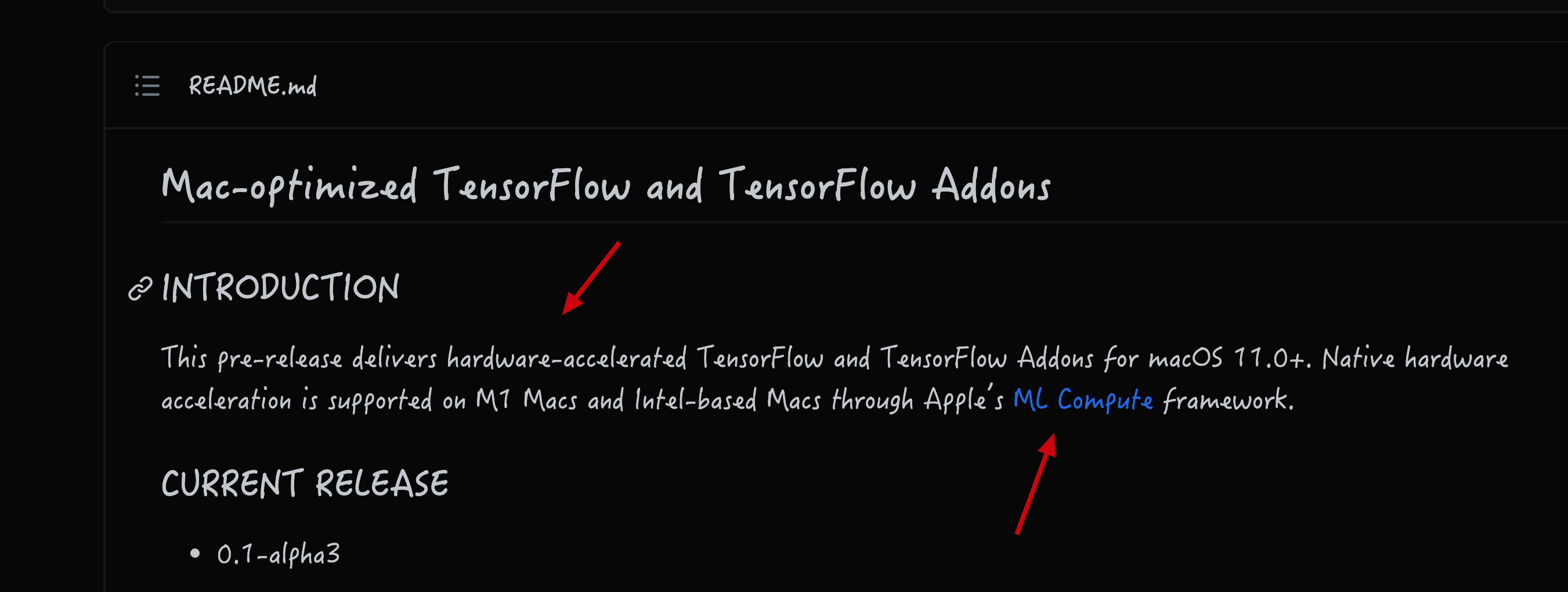

macOS has recently started to support TensorFlow officially, and here is the project link:

The project introduction above shows that it uses Apple's ML Compute framework to support hardware-accelerated TensorFlow natively. I happen to have an eGPU at hand:

It’s a Blackmagic eGPU box with an AMD Radeon Pro 580 card inside:

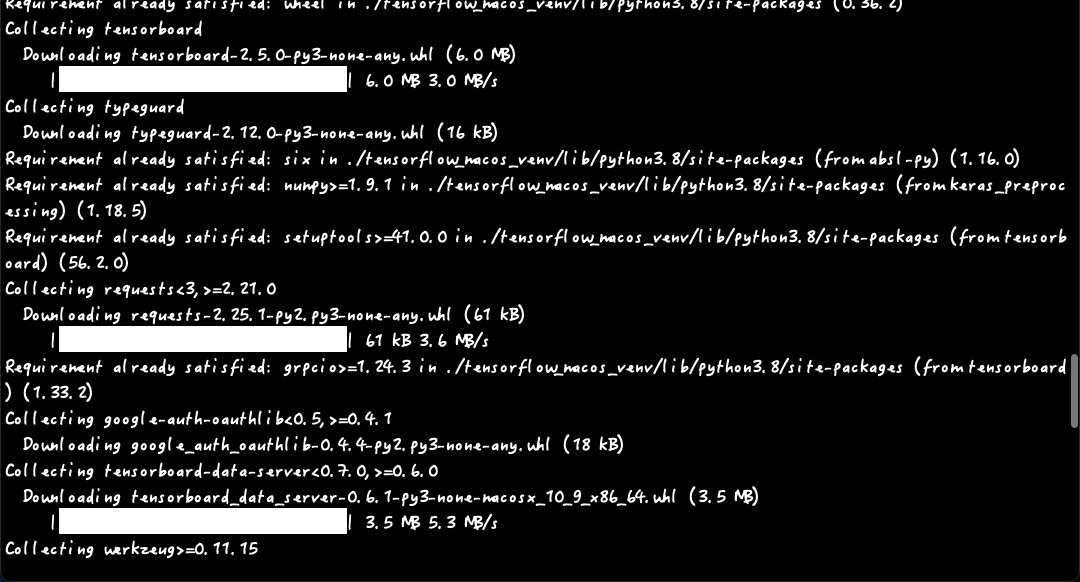

So I followed the installation instructions on the tensorflow_macos GitHub project page above and set up my local environment. Here is part of the installation process:

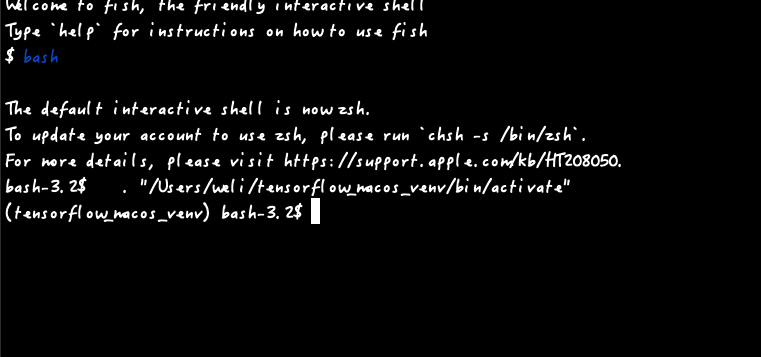

After installing it in my working directory ~/tensorflow_macos_venv/, I activated the virtual environment:

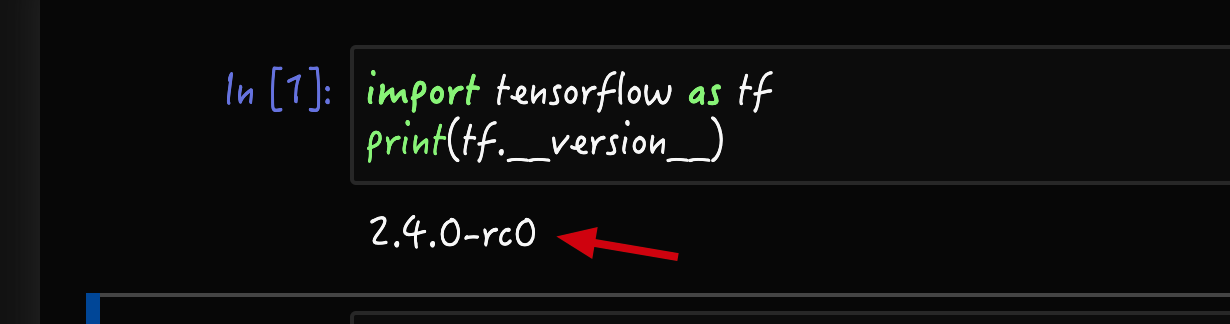
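Since the Jupyter notebook used later should run inside this environment, a quick sanity check can help. The sketch below uses only the standard library and assumes the environment was created with Python's venv module (it also checks the legacy virtualenv attribute sys.real_prefix as a fallback); the helper name in_virtualenv is hypothetical.

```python
import sys
from pathlib import Path


def in_virtualenv() -> bool:
    """Return True if this interpreter runs inside a virtual environment (hypothetical helper)."""
    # venv (Python 3.3+) points sys.prefix at the environment directory,
    # while sys.base_prefix keeps pointing at the base installation.
    if sys.prefix != getattr(sys, "base_prefix", sys.prefix):
        return True
    # Older virtualenv releases set sys.real_prefix instead.
    return hasattr(sys, "real_prefix")


if __name__ == "__main__":
    print("inside virtual environment:", in_virtualenv())
    # With the setup above, this path is expected to end in tensorflow_macos_venv.
    print("environment prefix:", Path(sys.prefix))
```

Running it from a notebook cell is an easy way to confirm that Jupyter is using the environment's interpreter rather than the system Python.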

Inside this virtual environment, I installed Jupyter and started jupyter-notebook to enter some test code:

The code above shows that the TensorFlow version is 2.4.0-rc0. Then I used the code here for testing:

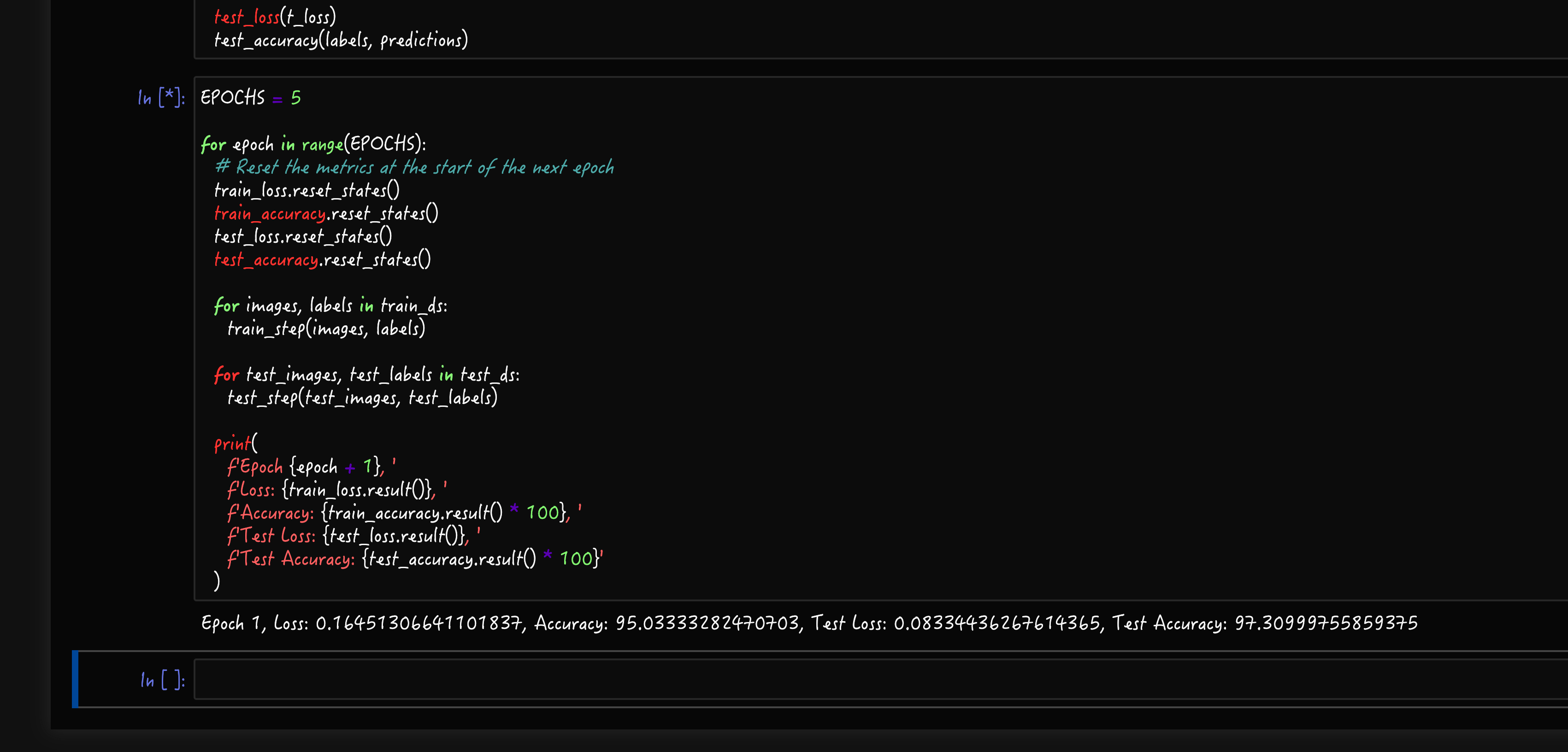

And here is the test result:

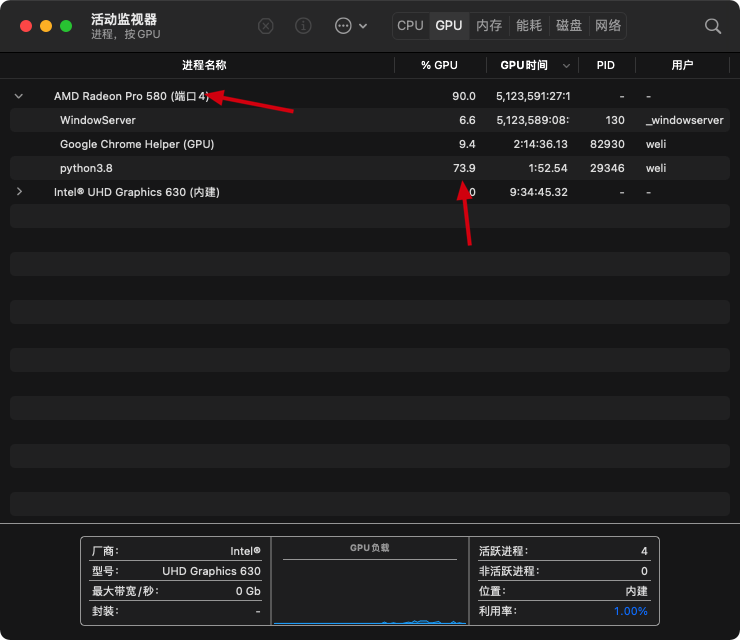
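The version check mentioned earlier can be sketched as follows. This is a minimal sketch that assumes Apple's tensorflow_macos fork, whose README documented an optional mlcompute.set_mlc_device(device_name=...) call accepting 'cpu', 'gpu' or 'any'; on a regular TensorFlow build that module does not exist, so the sketch skips it. The helper names parse_release and describe_tensorflow are hypothetical.

```python
import re
from typing import Optional


def parse_release(version: str) -> tuple:
    """Extract (major, minor, patch) from strings such as '2.4.0-rc0' (hypothetical helper)."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    return tuple(int(part) for part in match.groups())


def describe_tensorflow(device_name: str = "gpu") -> Optional[dict]:
    """Report the TensorFlow version and, on tensorflow_macos, request an ML Compute device."""
    try:
        import tensorflow as tf
    except ImportError:
        return None  # TensorFlow is not installed in this environment
    info = {
        "version": tf.__version__,
        "release": parse_release(tf.__version__),
        "mlcompute_device": None,
    }
    try:
        # Assumption: this module exists only in Apple's tensorflow_macos fork.
        from tensorflow.python.compiler.mlcompute import mlcompute
    except ImportError:
        return info
    # Options documented in the fork's README: 'cpu', 'gpu' and 'any'.
    mlcompute.set_mlc_device(device_name=device_name)
    info["mlcompute_device"] = device_name
    return info
```

In a notebook cell inside the virtual environment, print(describe_tensorflow("gpu")) would be expected to show the 2.4.0-rc0 version mentioned above; the function returns None when TensorFlow is not installed.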

While the code was running, I could see that it was actually executing on my eGPU:

This means macOS now officially supports a hardware-accelerated machine learning framework.

To compare running performance on GPU vs. CPU, we can use this project as a demonstration:

The advantage of this project is that you can choose which hardware runs your machine learning code. It supports several ML frameworks out of the box:

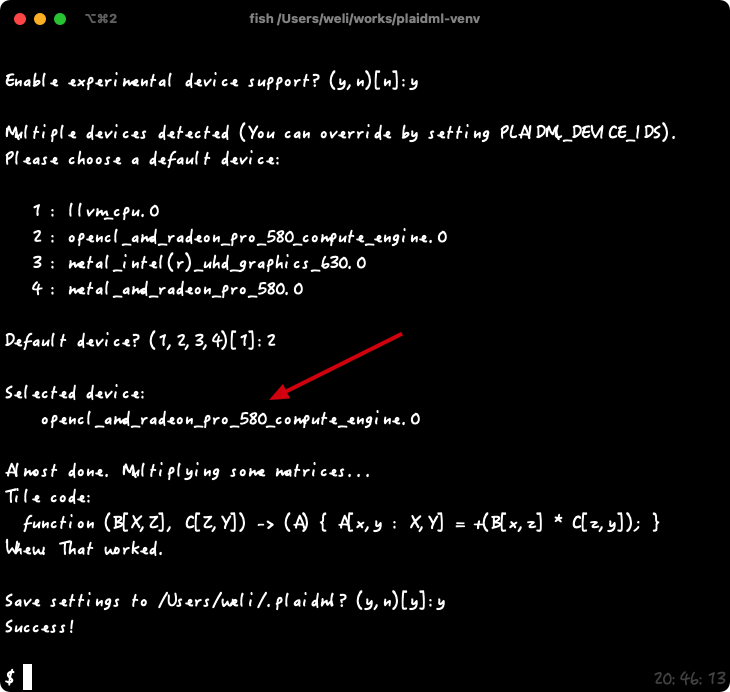

After installing the framework above and setting up the virtual environment properly, I ran the plaidml-setup command it provides to choose the hardware for running ML code:

As we can see, there are several devices to choose from on my computer: the first is the CPU (the default), and the others are my integrated Intel graphics and the external AMD eGPU.

Moreover, the eGPU can be selected in two ways: one uses the OpenCL layer and the other uses Apple's Metal API.
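PlaidML identifies each device by an ID whose prefix names the backend, which is why the same card can appear more than once. The sketch below groups such IDs by backend; the example IDs are hypothetical, modelled on the llvm_cpu / opencl_ / metal_ naming PlaidML typically prints, and may not match what plaidml-setup shows on another machine.

```python
from collections import defaultdict

# Hypothetical device IDs in the style PlaidML reports; real IDs depend on the machine.
EXAMPLE_DEVICE_IDS = [
    "llvm_cpu.0",
    "opencl_amd_radeon_pro_580_compute_engine.0",
    "metal_amd_radeon_pro_580.0",
]

# Prefix -> readable backend name (assumed naming convention).
BACKENDS = {"llvm_cpu": "CPU (LLVM)", "opencl_": "OpenCL", "metal_": "Metal"}


def backend_of(device_id: str) -> str:
    """Return the backend implied by a device ID's prefix, or 'unknown'."""
    for prefix, name in BACKENDS.items():
        if device_id.startswith(prefix):
            return name
    return "unknown"


def group_by_backend(device_ids):
    """Group device IDs by backend, preserving their original order."""
    groups = defaultdict(list)
    for device_id in device_ids:
        groups[backend_of(device_id)].append(device_id)
    return dict(groups)


if __name__ == "__main__":
    for backend, ids in group_by_backend(EXAMPLE_DEVICE_IDS).items():
        print(f"{backend}: {', '.join(ids)}")
```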

After selecting each device in turn, I ran the plaidbench keras mobilenet command to benchmark its performance using PlaidML's Keras support with the MobileNet model.
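plaidbench drives Keras through PlaidML. To run your own Keras code on the same device, PlaidML's documentation describes selecting its backend through the KERAS_BACKEND environment variable before Keras is imported. The sketch below only sets that variable; it assumes the standalone keras and plaidml-keras packages are installed when you go on to import Keras, and the helper name use_plaidml_backend is hypothetical. The device itself presumably still comes from the configuration saved by plaidml-setup.

```python
import os

PLAIDML_KERAS_BACKEND = "plaidml.keras.backend"


def use_plaidml_backend() -> str:
    """Point standalone Keras at PlaidML; must run before `import keras`."""
    # Keras reads KERAS_BACKEND when it is first imported, so setting it later has no effect.
    os.environ["KERAS_BACKEND"] = PLAIDML_KERAS_BACKEND
    return os.environ["KERAS_BACKEND"]


if __name__ == "__main__":
    use_plaidml_backend()
    # With plaidml-keras installed, these lines would build MobileNet on the
    # device chosen via plaidml-setup:
    # import keras
    # model = keras.applications.mobilenet.MobileNet(weights=None)
    print("KERAS_BACKEND =", os.environ["KERAS_BACKEND"])
```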

Here are the results for each device:

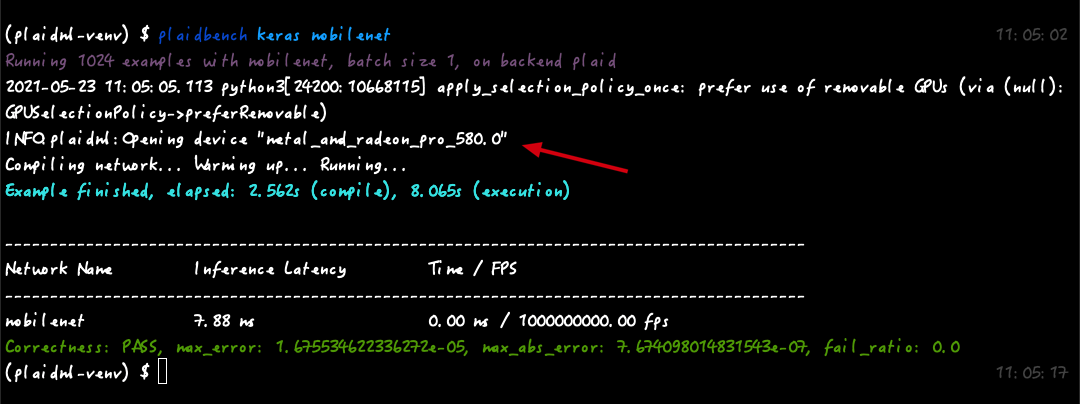

The first result above comes from the external GPU via Metal, which took around 8 seconds to finish the test. Next is the same external AMD card running through the OpenCL layer:

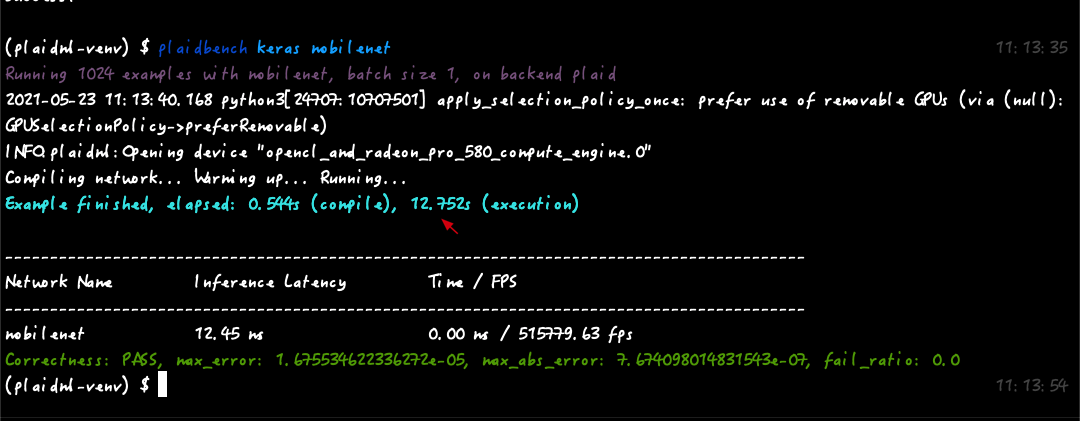

This run took longer, around 12 seconds. The third test ran on the integrated Intel graphics:

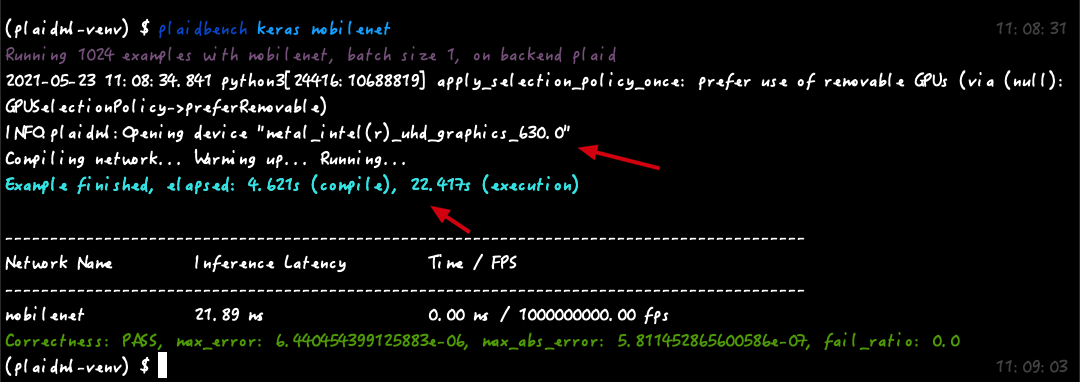

It took around 22 seconds to finish. The last run used the CPU directly:

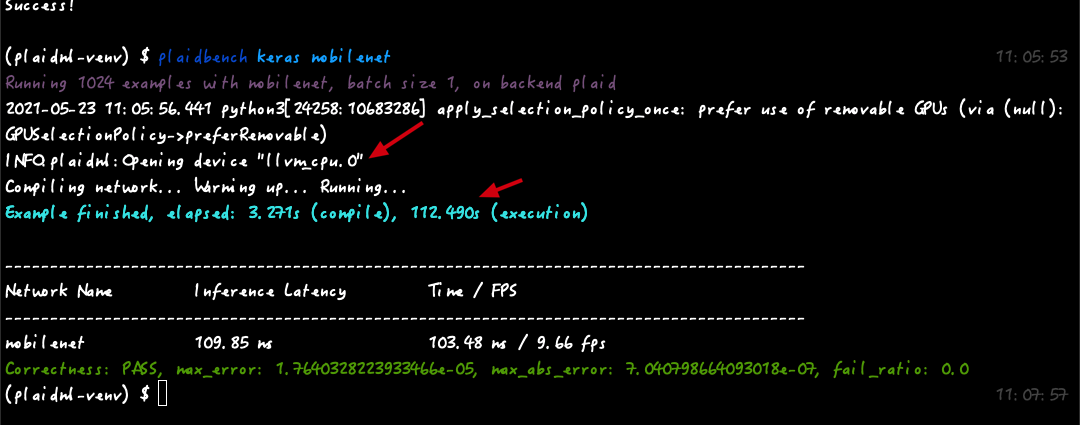

The CPU is dramatically slower than any of the GPUs: it took around 110 seconds to finish, roughly 14 times as long as the eGPU running through Metal (8 seconds).
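Putting the approximate timings side by side makes the gaps concrete. The sketch below simply divides each reported time by the fastest one; the numbers are the rounded values quoted in this post, not new measurements, and the device labels are descriptive names chosen for the example.

```python
# Approximate plaidbench keras mobilenet timings reported in this post, in seconds.
TIMINGS_S = {
    "eGPU (Metal)": 8.0,
    "eGPU (OpenCL)": 12.0,
    "Integrated Intel graphics": 22.0,
    "CPU": 110.0,
}


def slowdown_vs(baseline: str, timings: dict) -> dict:
    """Return how many times longer each device took than `baseline`."""
    base = timings[baseline]
    return {device: t / base for device, t in timings.items()}


if __name__ == "__main__":
    fastest = min(TIMINGS_S, key=TIMINGS_S.get)
    for device, factor in slowdown_vs(fastest, TIMINGS_S).items():
        print(f"{device:28s} {TIMINGS_S[device]:6.1f} s  x{factor:5.2f}")
```

With these values the CPU takes 13.75 times as long as the Metal eGPU run, the integrated Intel graphics 2.75 times, and OpenCL on the same eGPU 1.5 times.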

In addition, here is a list of the eGPU devices Apple currently supports:

And as Apple migrates to the Arm architecture, we may see macOS become a viable machine learning platform in the future.